Technology

The role of Technology in advancing our understanding of the Learning Brain

February 10, 2021

Human imagination drives exploration, innovation, and discovery. While the physical world (the ocean, stars, fungi, flowers, and bacteria) continues to pique human curiosity on all continents, there is no more fascinating scientific field than the study of the human brain, which is, as far as we know, the most complex organ in the universe.

©multipleStudio

Technology has advanced our understanding of the learning brain over the past few decades, offering insights that can improve education.

By Tracey Tokuhama-Espinosa


by Sr. Garcia

Scientific discoveries have increased rapidly since the 1990s (dubbed the “decade of the brain” in the United States) thanks to huge investments in neuroimaging techniques, which allow a more accurate view of human brains as they perform tasks. For the first time in history, we can study the brains of healthy students in their classrooms as they learn, not just in laboratories under microscopes (Bevilacqua et al., 2019). This gives us better insights into how learning occurs, which, in turn, can help us improve our teaching. The new insights into the learning brain and how teaching influences its processes have already changed some of the long-held beliefs about the best ways to educate. This steep learning curve is buttressed by even faster advances in information and communication technologies (ICTs), particularly the Internet, which eases collaboration and knowledge exchange among researchers in the field, while also broadening access to that knowledge. Though rapid recent advances make the study of the learning brain seem new, in fact it goes back thousands of years.

Historical roots to understanding the learning brain

The Egyptians, Greeks, and Romans gave us the first glimpses into the physiology of the human brain. The first documented studies of the human brain date back to 1700 BCE in Egypt, after which Greek natural philosophers speculated about the anatomical seat of cognitive, motor, and sensory functions and the origin of neural diseases (Crivellato and Ribatti, 2007). Alcmaeon (500 BCE) was the first to identify the brain as the source of human consciousness, but Roman physicians, such as Galen (129–216 CE), were the first to document experimental studies showing areas related to motor and sensory processing. In the fourteenth century, the Dutch scientist Jehan Yperman identified three functional areas of the brain: one for the visual, gustatory, and olfactory senses, one for hearing, and one for memory. Thus began the search to “localize” brain functions. Where did “reasoning in the brain” occur? Where did “maths in the brain” occur? Why are some people smarter than others?

The first technological advances in the study of the brain related to standardization of practice, predominantly through autopsies and mainly on damaged brains. Magnus Hundt (1449–1519) published anatomical illustrations depicting the brain in terms of special senses and ventricular systems. Leonardo da Vinci’s (1452–1519) sketches of a centenarian’s brain and Andreas Vesalius’s (1514–1564) anatomical work not only created detailed visual records but also led to consistent naming of specific brain areas, creating common terms of reference and vocabulary. Among the most complete early depictions of the brain were architect Christopher Wren’s engravings for Thomas Willis’ (1664) Cerebri Anatome [The Anatomy of the Brain] (Tokuhama-Espinosa, 2010).

The Medieval Age in western Europe coincided with the Islamic Renaissance (7th–13th centuries), when Middle Eastern thinkers made great advances in the understanding of human learning and physiology (Clarke, Dewhurst, and Aminoff, 1996). In Persia, prominent scholars such as Avicenna, Rhazes, and Jorjani established medical practice based on observational data using the premier technology of the day: the trained eye. Ali ibn Abbas Majusi Ahvazi was a renowned Persian scientist of this era who wrote a large medical encyclopaedia entitled “The Perfect Book of the Art of Medicine”. It comprised 20 chapters, each of which began with an anatomical discussion. The chapters covered diseases and treatments and also offered detailed descriptions of structures such as the cranial nerves and sutures, inching humankind closer to a better understanding of the complexities of the human brain.

Christopher Wren’s engravings for Thomas Willis’ Cerebri Anatome (1664). The brain stem with nerves and vessels, including the circle of Willis, is depicted by Wren in a similar fashion to an architectural drawing.

Towards a scientific study of the learning brain

Al-Haytham (Latinized as Alhazen; 965–1039) was an Arab mathematician, psychologist, and physicist of the Islamic Golden Age. Biographers call him the “inventor of the scientific method”. He established that learning is generated by our sensory perceptions of the world, confirming an idea Aristotle had proposed some 1,300 years earlier. In this process, our senses feed information to our memory, and we compare new with old, detect patterns and novelty, and base new learning on past associations. While the neural mechanisms for memory were not completely understood at the time, this work laid the foundations for our understanding of memory systems, which were later acknowledged as one of two vital foundations of all learning, the other being attention. Without both well-functioning memory and attention systems, there can be no learning (Tokuhama-Espinosa, 2017).

It wasn’t until the seventeenth and eighteenth centuries, however, that the invention of the microscope and the discovery of bioelectricity began to show how the brain works through electrical and chemical exchanges. In 1754, Johann Gottlob Kruger suggested the use of electro-convulsive therapy for mental illness, indicating an understanding of how human behaviour was controlled by electrical and chemical changes and contact with the environment. These insights showed that electricity could change the chemistry of the brain, opening the way to the new field of molecular biology, which was later able to establish that new learning could be measured through increases in “white matter” when new connections are made in the brain.

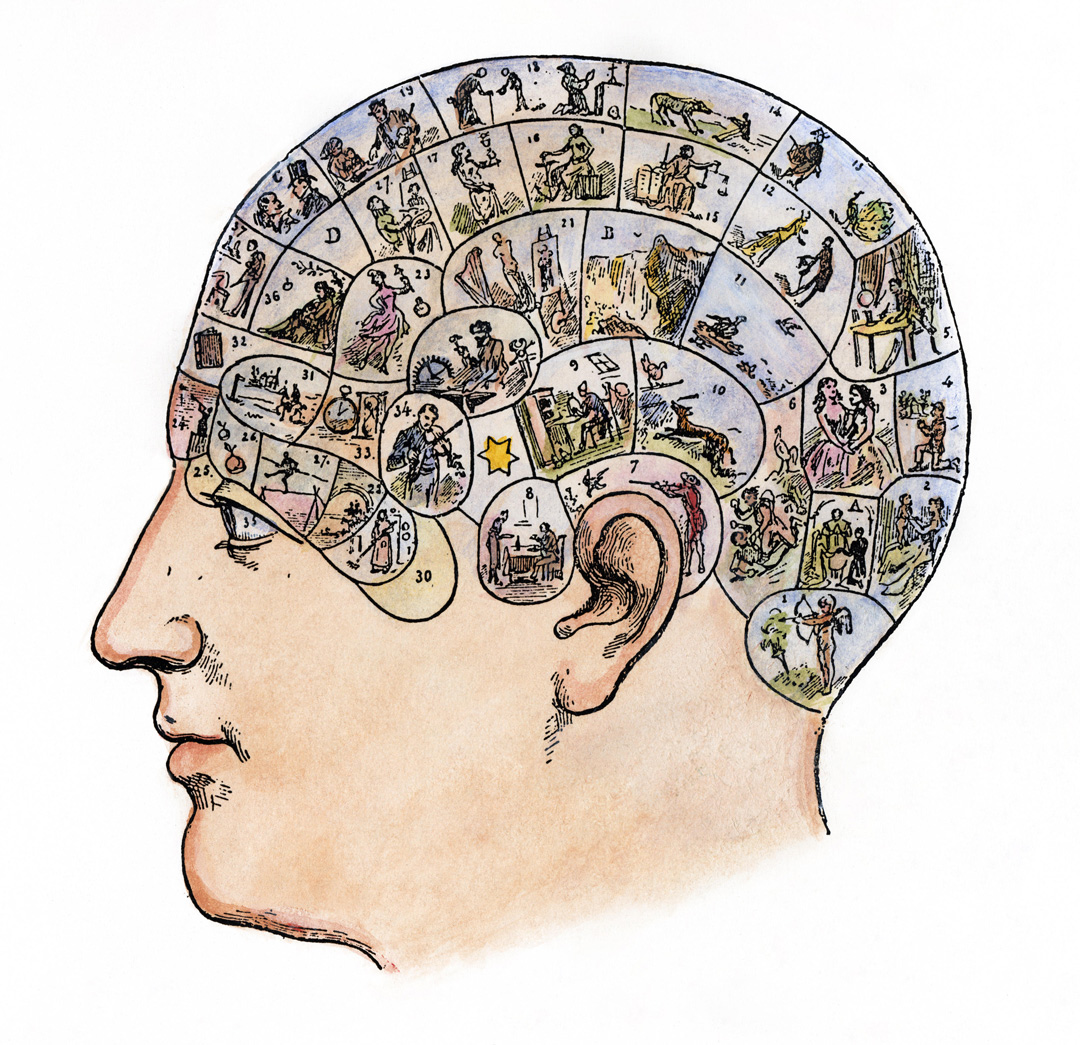

The final decades of the eighteenth century witnessed a growth of interest in the localization of brain functions, but not always based on technological discoveries. In 1792, Franz Joseph Gall and J. G. Spurzheim advocated the idea that different brain regions were responsible for different behavioural and intellectual functions, producing bumps and indentations on the skull. They thought that the more highly developed areas would require a greater volume of cortex, so that the part of the skull covering such an area would bulge outward and create a “bump” on the person’s head (Fancher, 1979). Phrenology used no technological tools and relied on a practitioner’s interpretation of the shape of a person’s head as felt by hand. Phrenology has since been discredited, but it did teach a key lesson in the learning sciences: simplistic answers are rarely the whole truth when it comes to the brain. Human personality and thought processes are far more complex than once believed. This ushered in an era of acceptance, and even celebration, of the complicated neural networks that underlie cognition.

Many simplistic explanations of the brain that followed were motivated by profit-making schemes of “brain-based teaching” products. People paid large sums of money to learn about pre-determined “learning styles” (a neuromyth), to distinguish between right- and left-brained thinkers (a neuromyth), and even to learn how to teach to the differences between boys’ and girls’ brains (a neuromyth) (Dekker, Lee, Howard-Jones, and Jolles, 2012).

By the Industrial Revolution, human observation, dissection, and interventions had become more commonplace and the new field of psychology began contributing to knowledge about how people learn (David Hartley published Observations on Man in 1749, the first English work using the word “psychology”). A better understanding of the mind led to increased curiosity about the brain, the physical organ behind learning. Speculation arose as to the underlying factors contributing to the learning difficulties some children faced. Using the factory as a metaphor, in which one material went in and another came out, more and more people wondered just how the “black box” of learning actually worked.

French phrenological chart, 19th century.

Seeing the invisible

During the Industrial Revolution, people began to think more about the role of neurons, the cells of the nervous system, in the learning process. Cell theory states that the cell is the basic structure in all living organisms, including humans. This idea had been suggested as early as 1665 by Robert Hooke but was rejected due to lack of proof at the time. A contemporary of Hooke’s, Anton van Leeuwenhoek, advanced the concept of cell biology by proving that cells were living organisms. It wasn’t, however, until the 1830s that German scholars Jakob Schleiden (1838) and Theodor Schwann (1839) discovered individual units in plant and animal bodies, which they called “cells”. Although cell theory quickly became universally accepted after 1839, most scientists of the nineteenth century, limited by the technology of the times and unable to see most cells with the naked eye, believed that the nervous system was a continuous reticulum of fibres, not a system of cellular networks. This is yet another example of how wonderful ideas in science are often proposed before technology catches up to confirm them.

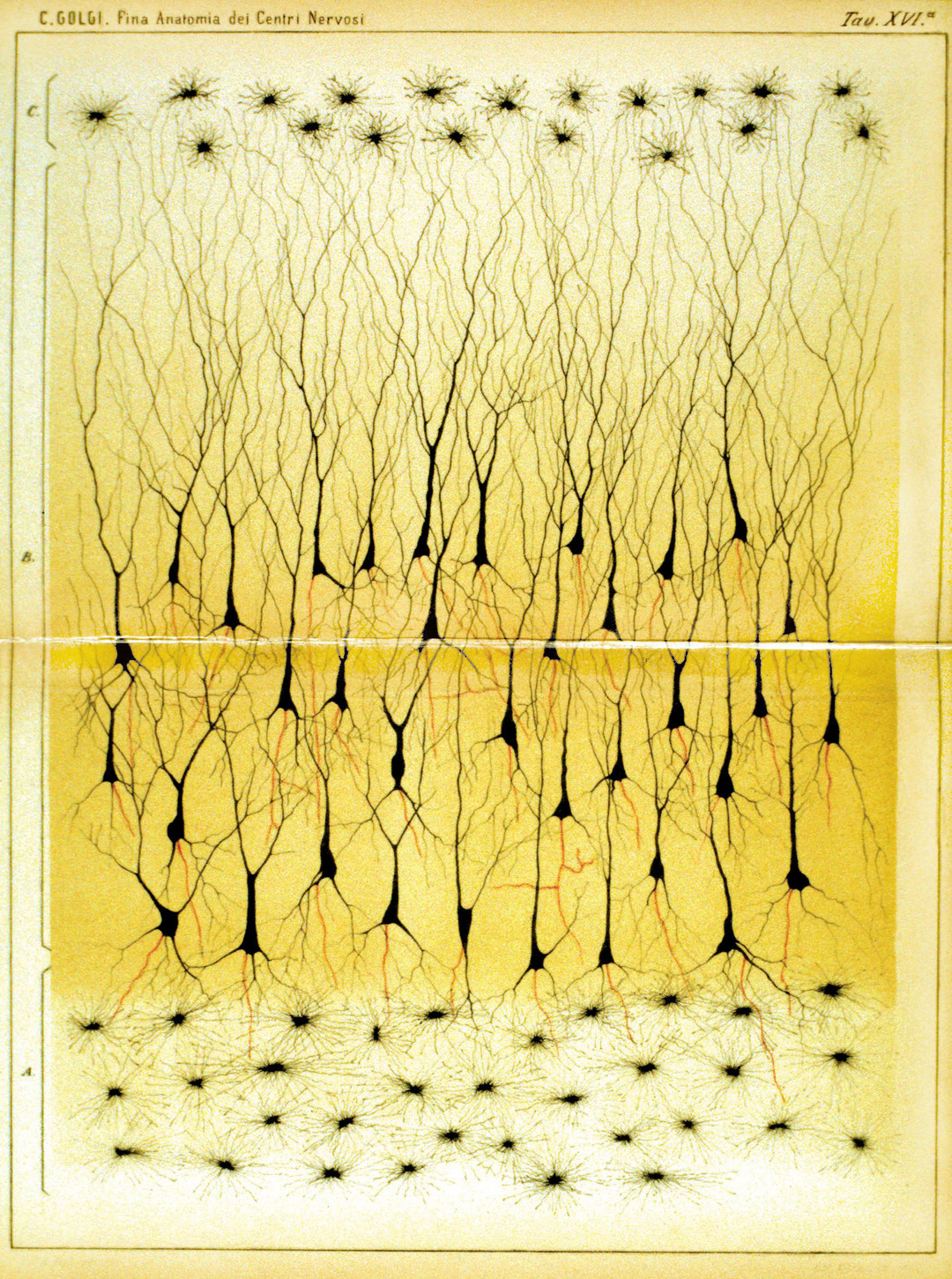

Another advance that made the fundamental building blocks of learning observable occurred in 1873, when an Italian scientist, Camillo Golgi, developed a staining process that made neurons and their connections easier to study under a microscope. Thanks to this, in 1886 Wilhelm His and August Forel proposed that the neuron and its connections might be an independent unit within the nervous system (Evans-Martin, 2010).

Part of a vertical section through the human pes hippocampi majoris. Source: Camillo Golgi (1885), Sulla fina anatomia degli organi centrali del sistema nervoso [On the fine anatomy of the central organs of the nervous system], Reggio-Emilia: S. Calderini e Figlio. Public domain.

By the end of the nineteenth century, Francis Galton’s studies had provided the basis for a statistical approach to measuring mental abilities, including intelligence. Galton, one of the first British psychometricians, was enamoured with the “normal distribution” and its success in describing many physical phenomena, which persuaded him that it would permeate all manner of other measurements, and mental measurements in particular (Goldstein, 2012). Following Galton’s work, researchers such as Alfred Binet (Binet and Simon, 1904) and David Wechsler (Wechsler, 1939) developed instruments to measure mental abilities, which later formed the first intelligence tests.

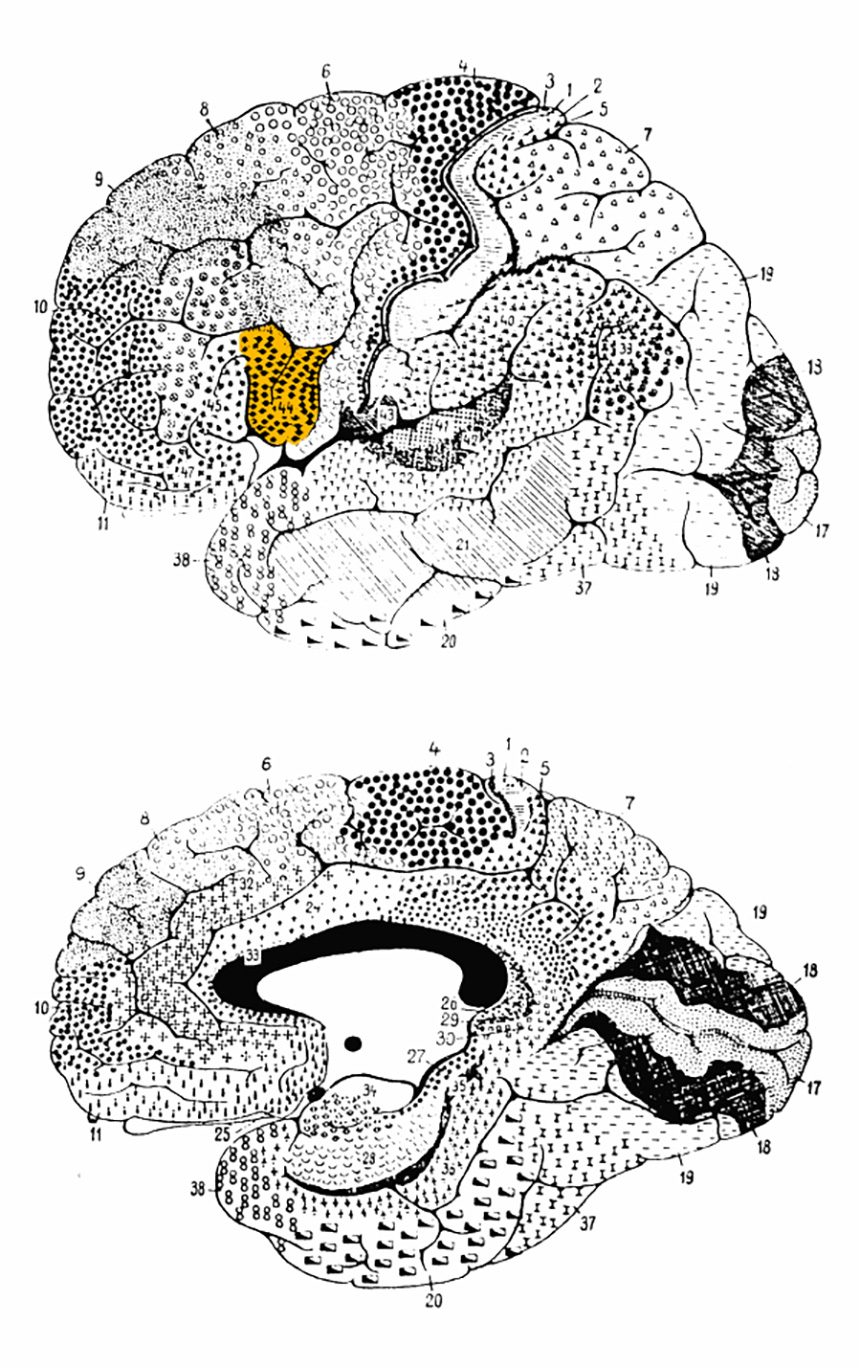

Discoveries related to cortical function continued in the early twentieth century. In 1908, Korbinian Brodmann drew a cortical map of the brain based on comparative studies of mammalian cortex. He identified 52 cortical areas known today as Brodmann’s Areas. The advancement of new technologies in the early 1900s assisted researchers in understanding the neural activity in the human brain.

In 1929, Hans Berger recorded the electrical activity of neurons using electrodes placed on the scalp and called the record of the signals an “electroencephalogram” (EEG) (Haas, 2003). Six years later, in 1935, Edgar Douglas Adrian verified that information is transferred between neurons via trains of electrical activity, which vary in frequency based on the intensity of the stimulus. This laid the foundation for the future discovery of electrical synapses, hypothesized by Golgi and Ramón y Cajal in 1909, well before their existence was confirmed in the 1950s (Kandel, Schwartz, and Jessell, 2000).

The early 1900s also produced valuable new understandings about the connection between the physical structures of the brain and the psychological processes of learning and memory. Karl Lashley proposed the theory of equipotentiality, namely the ability of any neuron to do the job of any other (Lashley, 1930), which was in stark contrast to the views of the localizationists of the time, who thought that each brain area specialized in a particular function. Today, it is accepted that both were partially right, but neither was entirely correct.

Investigation of the neurobiological bases of learning was begun in earnest by Donald Hebb in the first half of the 1900s. Hebb’s principle, now famously summarized as “neurons that fire together wire together” (Hebb, 1949), challenged both Lashley and the localizationists. Hebb was one of the first to suggest that neurons generally connect to their closer neighbours, which introduced a new vision of neuronal networks, as opposed to simple individual cell activity. This was only shown to be true in 2009 by the Connectome Project (Sporn, 2012). This concept had valuable implications for classroom learning as it offered a neurophysiological explanation of why certain types of memories seemed to cluster together in recall activities.

Brodmann area 44. Source: Cytoarchitectonics, adapted from Brodmann (1908). Public domain.

The emergence of science in understanding the learning brain

The early twentieth century saw new hypotheses about human learning drawn from animal research. Ivan Pavlov’s studies on conditioned reflexes in dogs laid the foundation for the application of conditioning techniques in behaviour modification in classrooms. Pavlov’s work provided John Watson with a method for studying behaviour and a way to theorize how to control and modify it (Schultz and Schultz, 2011). In 1913, Watson introduced the term behaviourism and served as the most vocal advocate for the behaviourist perspective in the early part of the century (Watson, 1913).

In 1958, Mark R. Rosenzweig and his colleagues at the University of California, Berkeley published the results of experiments on rats that opened a new line of investigation related to the neurobiological basis for behaviour and the influence of “enriched” environments (Krech, Rosenzweig, and Bennett, 1958). This gave birth to a new business of “early stimulation classes” for children, in which babies were taught to recognize shapes on flash cards. These early studies were mistakenly seen by the public as supporting early stimulation classes for infants, when they were really a condemnation of poor or deprived environments. Six years later, Marian Diamond and her colleagues, also at Berkeley, published a study which showed that exposure to “enriched” experiences changed the dendritic arborization and functional connectivity of brain cell networks in rats (Diamond, Krech, and Rosenzweig, 1964). Building on Diamond’s work, William Greenough explored the plasticity of the brain, that is, the brain’s capacity to change with experience.

Science and technology interface to advance the understanding of the learning brain

As interest in the brain grew among the public, the United States declared the 1990s the Decade of the Brain. This announcement was accompanied by new funding and investment in the learning sciences as a whole, and in technology in particular. The advancement of new brain-imaging techniques (including refined functional magnetic resonance imaging [fMRI]) prompted scholars to think about the practical applications of brain research for educational policy and practice. More than 32,000 peer-reviewed articles published between 1990 and 2000 used MRI or fMRI scans to measure some element of learning. However, not all of these studies focused on human learning. In fact, only a handful of studies during this time used human subjects, and even fewer were conducted on school-aged children. The lack of evidence directly gathered from school-aged children, along with the promotion of neuromyths, prompted caution about the true utility of neuroscience research for teaching.

Neuroimaging: A critical technological breakthrough

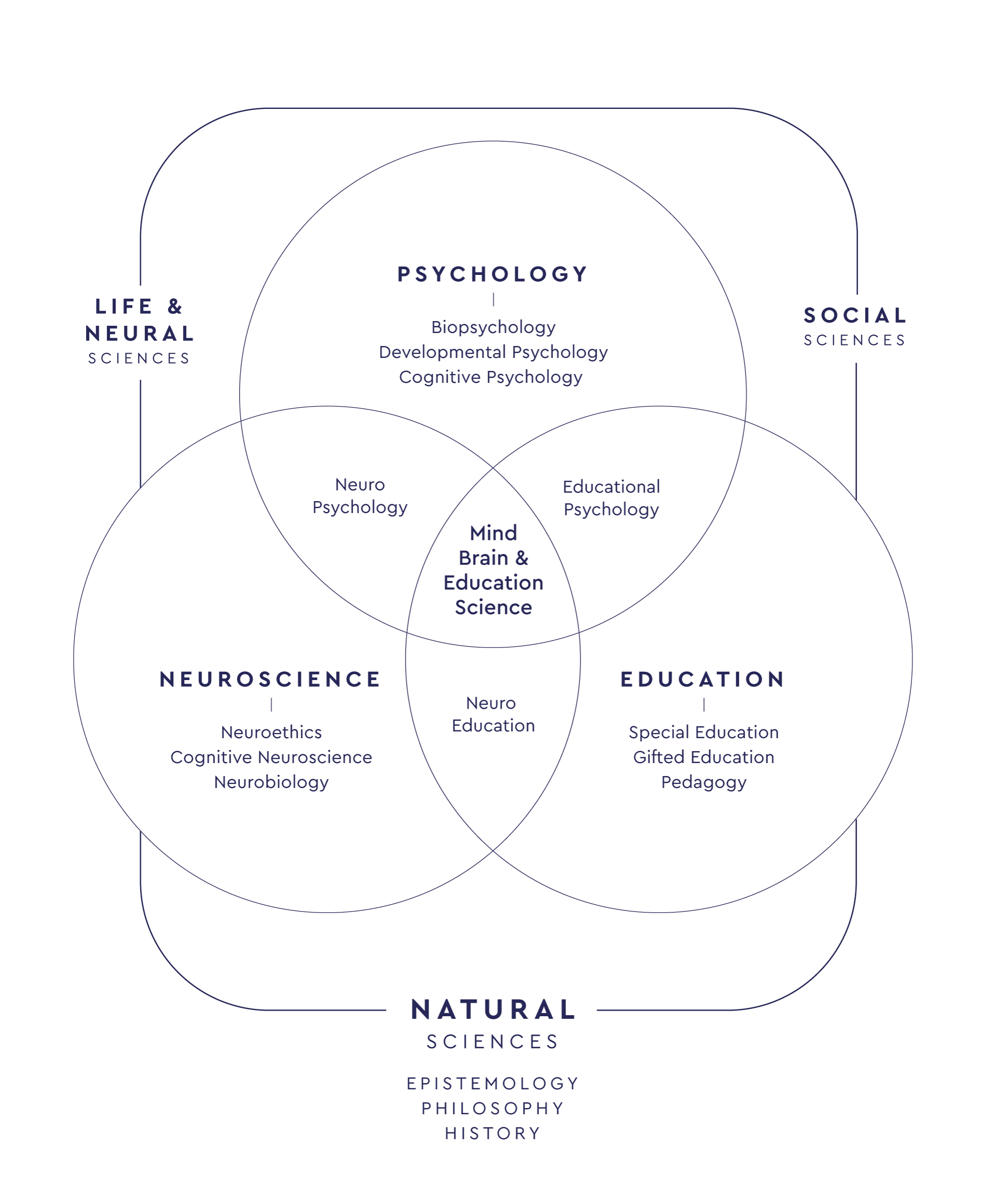

Advancements in neuroimaging techniques from 1990 to the present, in terms of both measurement accuracy and data analysis, marked key milestones in the development of the learning sciences, especially Mind, Brain, and Education (MBE) science. Technology began to link observable learning in classrooms to molecular-level changes in brains in laboratories to better understand how the teaching-learning dynamic actually works.

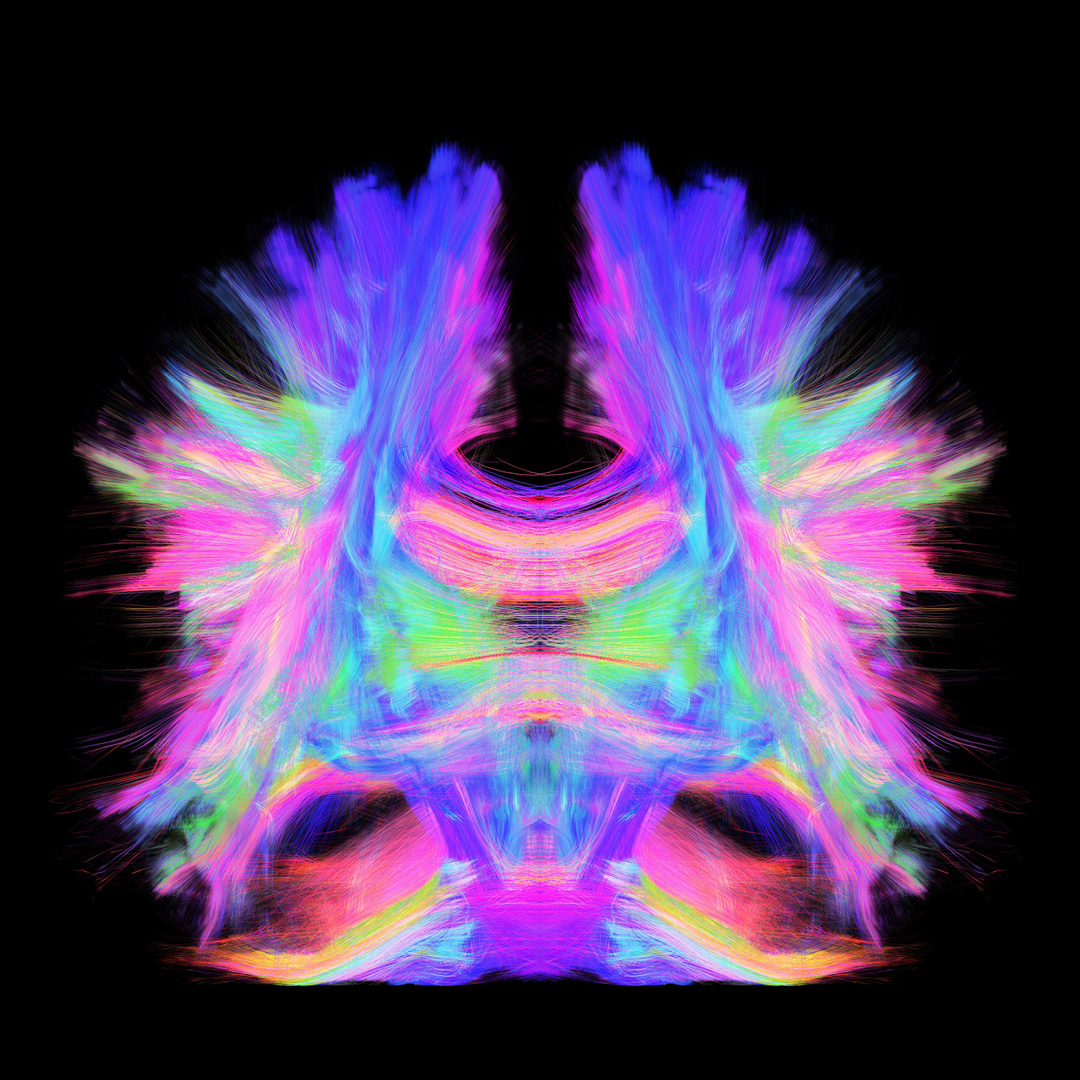

The Human Connectome Project (HCP), a US National Institutes of Health initiative launched in 2009 to “construct a map of the complete structural and functional neural connections in vivo within and across individuals” (Bookheimer et al., 2019), not only financed important international studies but also gave the general public a new view of the complexity of human thinking through publicly available images. The project includes researchers from dozens of institutions, including Washington University, University of Minnesota, Harvard University, University of California at Los Angeles, University of California Berkeley, St. Louis University, Indiana University, D’Annunzio University, University of Warwick, University of Oxford, and the Ernst Strüngmann Institute. The HCP undertook a systematic effort to map macroscopic human brain circuits and their relationship to behaviour in a large population of healthy humans (Van Essen, 2013).

By combining existing neuroimaging tools, the HCP created colourful, detailed neural circuitry maps that told the visual story of reading problems, showed how arithmetic is processed in healthy brains, and charted hundreds of other cognitive skills and sub-skills that were previously invisible to the naked eye. The findings were made popular among the general public by Sebastian Seung in his book, Connectome: How the Brain’s Wiring Makes Us Who We Are (Seung, 2012).

Practical interventions based on neuroscience for the classroom

Thanks to the Connectome, the learning sciences extended beyond the theoretical and turned practical.

Learning technology in multiple forms, including apps, video gaming and augmented reality, as well as platforms that offered teachers services to improve student learning, began to increase exponentially in the twenty-first century, creating by 2018 an industry worth $6 billion, out of the total of $21 billion spent on computers, video and gaming (Entertainment Software Association, 2018). Several manufacturers claimed to work with cognitive neuroscientists to create unique instructional designs in educational programming, but only a handful of studies supported these claims.

Nonetheless, the potential for marrying the benefits of technology and neuroscience became clear in the second decade of the twenty-first century. An OECD (2017) report suggested that two areas of necessary growth for teacher education included technology and neuroscience. Combining technology with the learning sciences poses an interesting challenge and has generated multiple research studies.

Perhaps the most important advances in the second decade of the current century have been in research. Initial studies have gathered neuroscientific data directly from real-life classrooms, with real children, using both psychological and observational methods, as well as molecular/neuroscientific tools. Studies by researchers at the Queensland Brain Institute, for example, examine multiple aspects of learning, such as “Brain-to-Brain Synchrony and Learning Outcomes [that] Vary by Student–Teacher Dynamics: Evidence from a Real-world Classroom Electroencephalograph” (Bevilacqua, 2018).

Such ground-breaking research appears to explicitly show the benefits of combining neuroscientific research with psycho-social interactions in real classrooms, paving the way for a new kind of teacher training based on evidence about the brain and teaching.

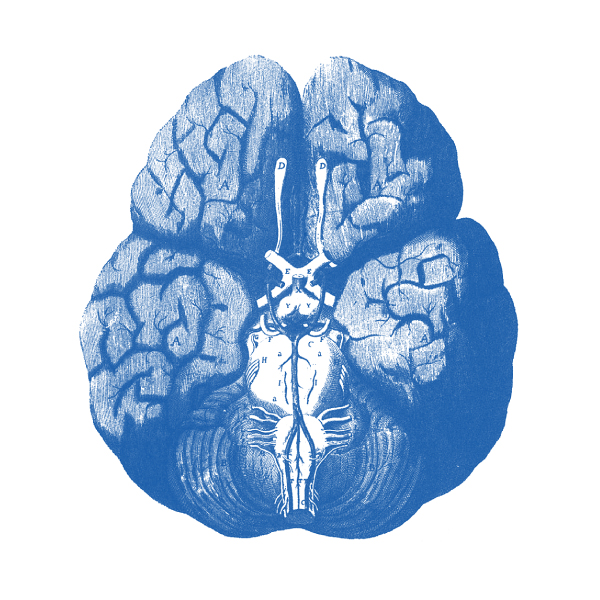

Axonal nerve fibers in the real brain.

Where are we headed now?

Non-invasive neuroimaging techniques have proven an invaluable tool for understanding brain development and functional reorganization in typical and atypical child populations, from infancy through adolescence (Dick et al., 2013). Just as the first microscopes of the 1600s gave way to electron microscopes in the 1930s, and Golgi’s stains gave way to Connectome imagery, the future promises an even clearer vision of the brain. Emerging technologies can make unique contributions to answering longstanding educational questions that are not amenable to traditional research methods in psychology and education, and can help tailor curricula to pupils’ individual neurocognitive abilities (Fischer et al., 2010; Howard-Jones, 2007). There is no question that technology will make considerable contributions to uncovering the mechanisms underlying neural and cognitive development (Dick et al., 2013).

At the opening of the third decade of the new millennium, we are cautiously optimistic. Technology continues to advance, most notably in areas of neuroimaging where tools are becoming less expensive, more accurate, and more widely available. While there is no such thing as a machine that can read our minds, there are tools to facilitate better learning, using algorithmic patterns which permit students to rehearse basic cognitive skills. Improvements in communication technologies are also bridging communities and advancing the global knowledge base on how the brain learns.

The next generation of scholars is challenged by the same goal as the ancient sages: to make the invisible visible. In doing so, they must enlighten the public about the ethical use of credible neuroscientific knowledge to advance human learning and ensure equity of effective lifelong learning opportunities.
